Details matter: Insights from day two of the What Works Hub for Global Education annual conference

Natasha Ahuja and Pedro Freitas

By the second day of the What Works Hub for Global Education 2024 annual conference, there was one thing that everyone agreed on: how we put programmes into action matters as much as the ideas themselves. It’s not enough to have good ideas—we need to make sure they are implemented well.

The point became clear just by looking at who was in the room: a unique mix of researchers, practitioners, donors, and policymakers. The need for all these groups to be in the same room was obvious. Combating the global learning crisis cannot happen if the sector works in silos; having this diverse group together was both rare and important.

The second session of the day kicked off by showcasing various programmes, each highlighting how central implementation is to success.

Day 2: Session 2 – Under the hood: Understanding implementation details of effective pedagogical programmes (© What Works Hub for Global Education 2024)

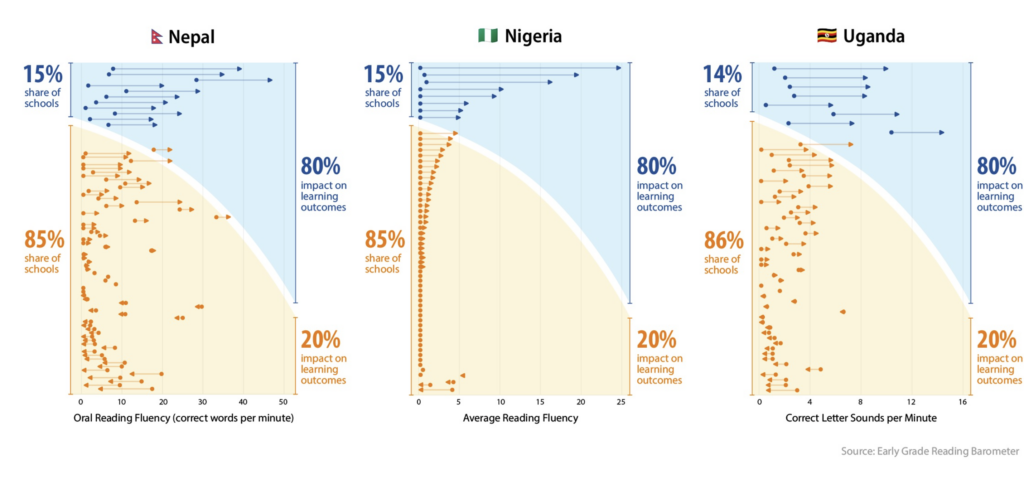

One study from Haiti by TJ D’Agostino from the University of Notre Dame and coauthors demonstrated this well.[1] The programme, an early grade reading intervention, was successful overall, but much of its success came from a small number of sites. The main factor? Dosage: how much of the time the programme was actually put into practice at each site. By tracking implementation, the authors could see that in many schools the programme was delivered less frequently than intended, as teachers often alternated between the programme materials and their previous practices.[2] As a result, the programme worked well in some sites but not so well in others. The same pattern was observed in an early grade reading programme in Nepal, where 80% of the results came from just 15% of the schools.

Figure 1: Learning gains by site for some early grade reading programmes

Source: RTI International

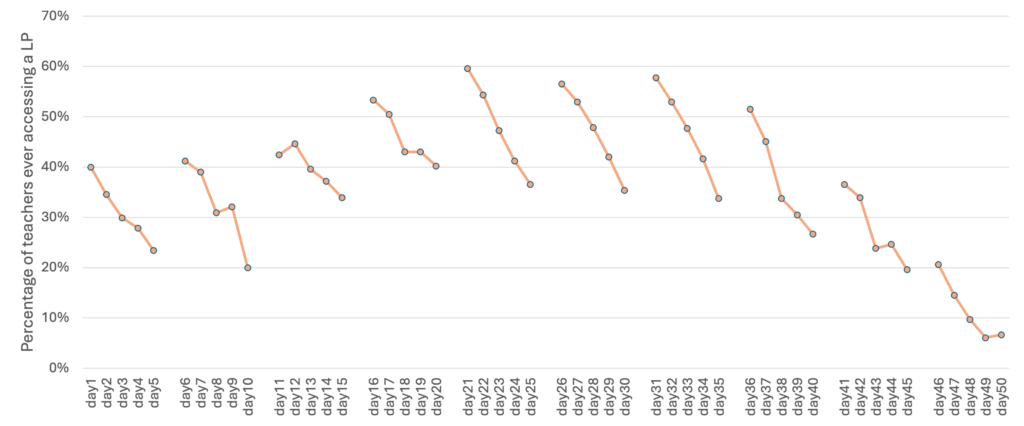

Stephen Taylor from the South African Department of Basic Education then showed how backend data from EdTech tools can be used to track implementation carefully and to better understand impact evaluation results.[3] For instance, he found that classroom fidelity, measured by the percentage of lessons accessed, revealed a distinct seasonality in the usage of curriculum materials. Curriculum implementation was higher at the start of every week than at the end, weakened later in the school term, and dropped even further in the second year of the project. Interestingly, traditional data collection methods, such as teacher surveys, reported higher levels of material usage than the app data showed. Insights such as these might have been missed without backend data.

Figure 2: Percentage of lessons accessed during a term
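As a rough illustration of the fidelity measure described above, the sketch below computes the percentage of planned lessons accessed per week from an app access log. It is not the method used in the study: the log layout (`teacher_id`, `week`, `lesson_id`), the function name `pct_lessons_accessed`, and the fixed `lessons_per_week` are all assumptions made for this example.

```python
def pct_lessons_accessed(access_log, teachers, weeks, lessons_per_week):
    """Return {week: percentage of planned lessons opened at least once}.

    access_log is a hypothetical list of (teacher_id, week, lesson_id) events.
    """
    # Track distinct lessons per (week, teacher) so repeat opens count once.
    opened = {}
    for teacher_id, week, lesson_id in access_log:
        opened.setdefault((week, teacher_id), set()).add(lesson_id)

    # Planned lessons per week across all teachers (assumed constant).
    planned = len(teachers) * lessons_per_week

    result = {}
    for week in weeks:
        accessed = sum(len(opened.get((week, t), ())) for t in teachers)
        result[week] = 100.0 * accessed / planned
    return result


# Illustrative data only: two teachers, three weeks, five planned lessons a week.
log = [
    ("t1", 1, "lesson-1"),
    ("t1", 1, "lesson-2"),
    ("t1", 1, "lesson-1"),  # repeat open, counted once
    ("t2", 1, "lesson-1"),
    ("t1", 2, "lesson-6"),
]
usage = pct_lessons_accessed(log, teachers=["t1", "t2"], weeks=[1, 2, 3], lessons_per_week=5)
print(usage)
```

Passing the full list of weeks and teachers explicitly means that weeks or teachers with no recorded activity still appear as zero usage, rather than silently dropping out of the series.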

Another example from Guthrie Gray-Lobe from the Development Innovation Lab at the University of Chicago showed that simply reducing the complexity of teacher guides made a huge difference in how well teachers could carry out the programme.[4] Less complexity led to better outcomes (0.17 standard deviations on average).[5] Merely modifying the messaging to make it simple and clear might seem like a small detail, but it is also applicable beyond this programme, including in facilitating evidence uptake by policymakers.

Figure 3: A modified version of a teaching guide

Source: Video of the conference session

To continue improving interventions, we need to gather more evidence, particularly in contexts where data is scarce. Daniel Iddrisu from the Research for Equitable Access and Learning (REAL) Centre, University of Cambridge highlighted this with his work, showcasing a data repository compiled by the REAL Centre and Education Sub-Saharan Africa (ESSA). He stressed the importance of filling gaps in evidence, especially regarding early childhood development, children’s socio-emotional growth, and teaching quality.

Daniel Iddrisu, University of Cambridge, REAL Centre (© What Works Hub for Global Education 2024)

In short, the big takeaway from the session was that success lies in the details. What happens in the classroom is central to the impact we want to see, and we need to measure and refine how programmes are delivered. While it’s clear that good implementation is critical, the challenge lies in measuring it consistently across different programmes and contexts.

Conversations between researchers, practitioners, and policymakers are key to bridging this gap between theory and practice—and that’s why being in the same room matters so much.

Watch the video of the full session on YouTube.

Footnotes

1. The authors used a mixed-methods approach to explore the contextual factors that explain different results of a structured early-grade reading program in 350 schools in five regions in Haiti.

2. The other implementation factors highlighted include (1) challenges due to teachers’ poor academic backgrounds, and (2) geography, as teacher coaching sessions struggled to reach very remote rural areas of Haiti.

3. The results come from a structured pedagogy intervention from South Africa. This programme was delivered via an app and tablets distributed to 600 teachers, featuring daily lesson plans for grades 1-3 during 2022 and 2023. The app usage data provided rich insights into how teachers engaged with the materials.

4. There were two experiments conducted in a private school network in Kenya, wherein they provided teachers with less detailed materials, giving them more discretion.

5. This was most effective in larger classrooms, where it also led to a reduction in the dispersion of students’ results.

Ahuja, N., and Freitas, P. 2024. Details matter: Insights from day two of the What Works Hub for Global Education annual conference. What Works Hub for Global Education. 2024/007.

https://doi.org/10.35489/BSG-WhatWorksHubforGlobalEducation-BL_2024/007
