
5 insights from a survey on stakeholders’ evidence needs

Madhuri Agarwal, Michelle Kaffenberger and Lily Kilburn

Generating high-quality education research and synthesis is only half the battle. That evidence can only make a difference if it’s actually used by governments, funders, practitioners and other researchers.

Evidence is more likely to be taken up if it focuses on topics that are important to stakeholders. It also has to be presented in the right format for stakeholders’ needs. So, we at the What Works Hub for Global Education decided to ask a diverse group of stakeholders about their priorities for research, synthesis and evidence translation.

What education topics were most important to these stakeholders? What do they need from evidence in education?

A new insight note explores the results in depth, and we’ll present some key points here.

The study

We surveyed 146 respondents from government, non-profit and civil society organisations, funding organisations and research organisations. Of these, 40% were based in the Global South, and all were working toward improving foundational learning in low- and middle-income countries.

What did the survey show? Here are five takeaways that have significant implications for anyone working on education research and synthesis.

1. Implementation, teachers and scaling are high priorities

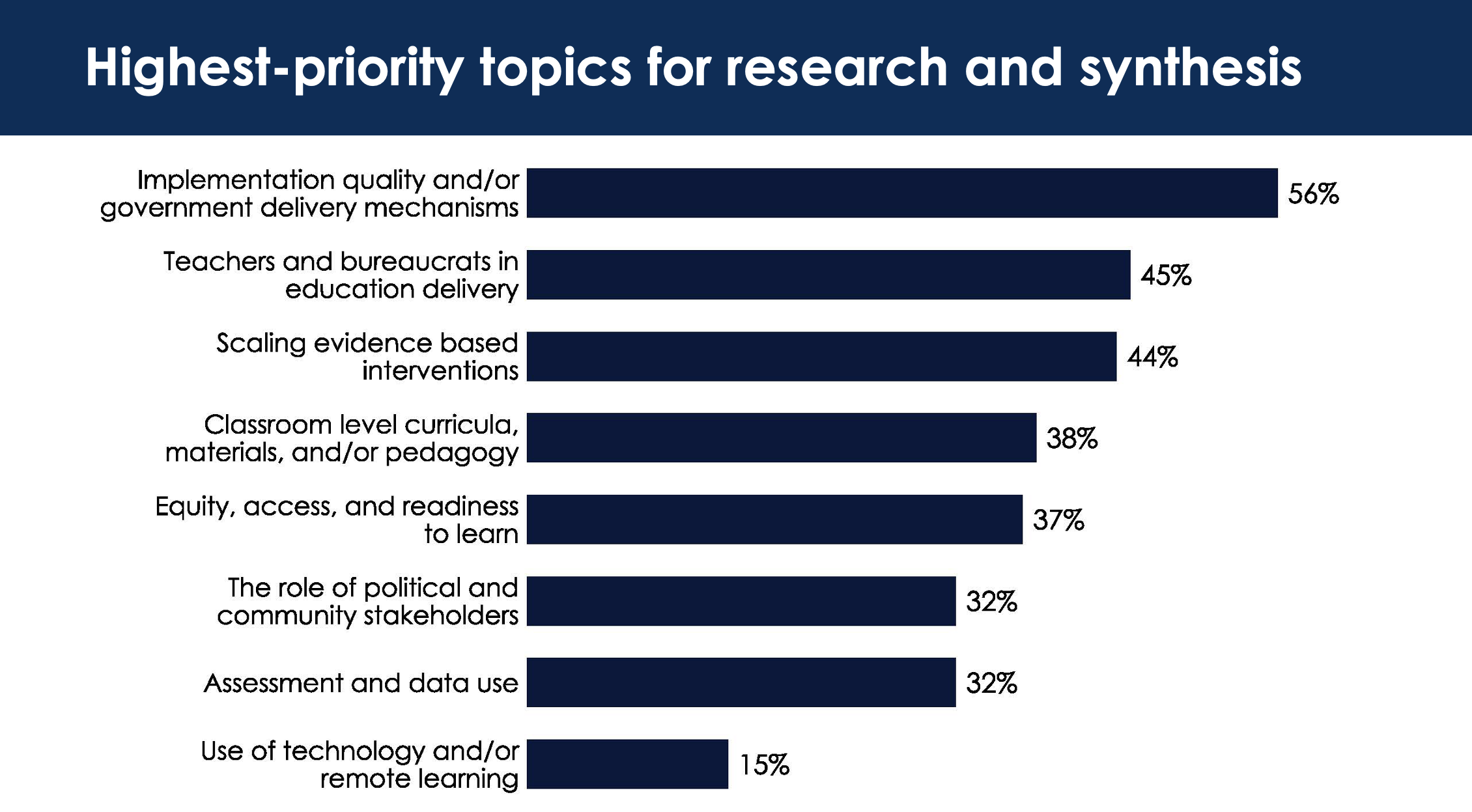

Respondents were asked to rank a list of education research topics by priority. If they ranked a topic within their top three, it was considered a high priority for them.

Which topics were high priorities for the most respondents overall?

- ‘Implementation quality and/or government delivery mechanisms’ – 56%

- ‘Teachers and bureaucrats in education delivery’ – 45%

- ‘Scaling evidence-based interventions’ – 44%

- ‘Classroom-level curricula, materials, and/or pedagogy’ – 38%.

Implementation quality and delivery mechanisms were a high-priority topic for over half of respondents.
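For readers who want to apply the same top-three rule to their own ranking data, here is a minimal sketch in Python. It is an illustration only, not the analysis code behind the survey: the responses, the short topic labels and the function name `high_priority_shares` are all hypothetical.

```python
from collections import Counter

# Hypothetical rankings: each respondent lists topics from highest to
# lowest priority. These are made-up responses, not survey data.
rankings = [
    ["implementation", "teachers", "scaling", "curricula"],
    ["scaling", "implementation", "curricula", "teachers"],
    ["equity", "curricula", "teachers", "implementation"],
    ["implementation", "equity", "scaling", "teachers"],
]


def high_priority_shares(rankings, top_n=3):
    """Return the share of respondents who placed each topic in their top_n."""
    counts = Counter()
    for ranked_topics in rankings:
        # A topic counts once per respondent if it appears in their top_n.
        counts.update(set(ranked_topics[:top_n]))
    total = len(rankings)
    return {topic: counts[topic] / total for topic in counts}


shares = high_priority_shares(rankings)
for topic, share in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{topic}: {share:.0%}")
```

Because each respondent can have up to three high-priority topics, the shares do not sum to 100%, which is also why the percentages listed above add up to more than 100%.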

2. Stakeholder groups sometimes have markedly different priorities

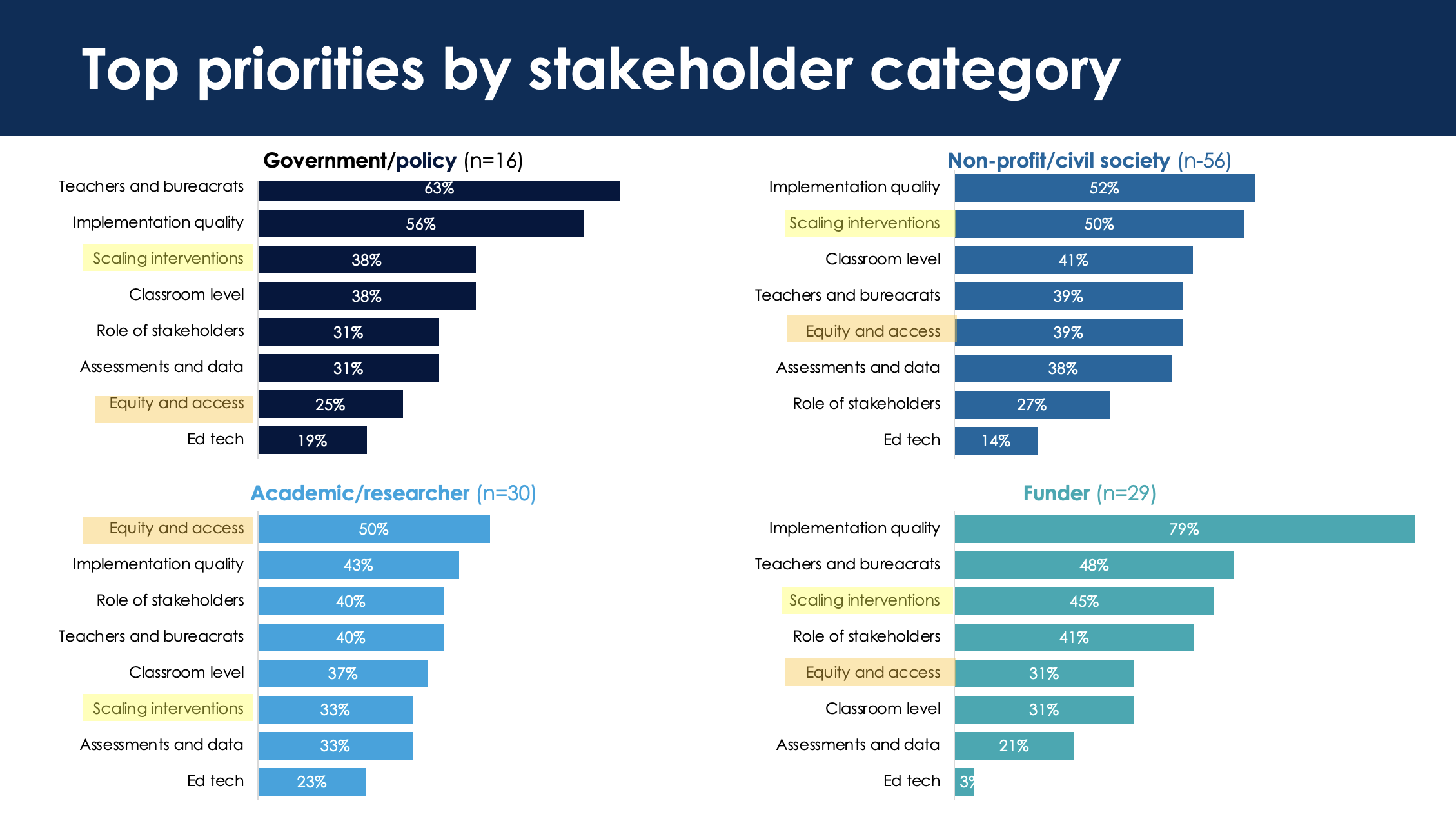

Priorities differed notably between stakeholder groups on two topics.

‘Scaling evidence-based interventions’ was a high priority for respondents from non-profits/civil society organisations, funding organisations and governments, but it was ranked as a low priority by academics.

On the other hand, ‘equity, access, and readiness to learn’ was a priority topic for academics but ranked relatively low by all other stakeholders.

Note: Scaling interventions is highly ranked by all stakeholders except academics and researchers; equity and access is ranked relatively low by all stakeholders except academics and researchers.

3. Respondents care about implementation

Within their top four priority topics, respondents were asked to rank sub-topics in order of priority.

Many of the responses indicated interest in implementation science. Respondents wanted research and synthesis on the themes of:

- measuring implementation

- improving implementation fidelity, and

- designing and adapting for implementation at scale.

Other key priorities included ‘improving teaching quality through both pre-service and in-service training’ and ‘improved instructional materials.’

4. Different groups want different types of evidence outputs

It’s no surprise that stakeholder groups often want different forms of research and synthesis outputs. Understanding these different needs is important for tailoring outputs.

‘Practical guidance to inform the design and implementation of a programme’ stood out in the survey’s results because it was a high priority for all groups. That’s an important takeaway for anyone generating evidence in education.

Some notable differences in priorities:

- ‘Examples of successful scale-ups’ were highly prioritised by all stakeholder groups except government stakeholders, who ranked this as their lowest priority.

- ‘Formal synthesis of the latest academic evidence’ was prioritised by most groups but ranked second-to-last by funders.

Interestingly, stakeholders generally agreed about how and why they use evidence.

Among respondents, 86% said that building their own knowledge or expertise on a topic was a high priority. Communicating evidence to stakeholders and informing the design of new policies, programmes or initiatives were also important uses of evidence.

5. Respondents need synthesis outputs to be less academic and more focused on practical use in different contexts

The survey included an open-ended question about the shortcomings and limitations respondents have experienced with existing synthesis and evidence translation efforts or outputs.

Generally, they felt that outputs needed to give more actionable, detailed information about education interventions or programmes. They wanted to know what this research meant for them and their own contexts.

Often, outputs were too academic and complex, making them impractical for use by busy policymakers, practitioners and implementers. Outputs needed to give practical guidance that was shorter, free of jargon and aware of factors like cost-effectiveness.

What next?

These are crucial findings for researchers who want to create a culture of evidence use.

Evidence outputs need to be responsive to audiences’ needs if they’re going to have real impact. That may mean researchers will have to learn to present their findings in different ways to make them useful. These results also emphasise the importance of thinking about demand for evidence and, as always, listening to those on the ground and in the countries on which research focuses.

At the What Works Hub for Global Education, we aim to improve global learning by helping evidence get where it needs to go – into government policy, large-scale reforms and implementation in classrooms. We’ll be keeping the insights from this survey in mind as we continue our work on evidence use, evidence translation and implementation science.

If you’re passionate about evidence uptake, too, why not sign up to our newsletter to stay updated? That’s also how you can hear about events like our annual conference.

In addition, you can express your interest in joining our Community of Practice, which has over 500 members spanning 30 countries and 6 continents.

Agarwal, M., Kaffenberger, M. & Kilburn, L. 2025. 5 insights from a survey on stakeholders’ evidence needs. What Works Hub for Global Education. Blog. 2025/007. https://doi.org/10.35489/BSG-WhatWorksHubforGlobalEducation-BL_2025/007
